What I learned: Deep Learning Specialization - Deeplearning.ai

By Jan Van de Poel on Feb 16, 2018

Course: Deep Learning Specialization

By: Andrew Ng - Deeplearning.ai (Stanford, Coursera, ex-Google Brain, ex-Baidu, landing.ai)

Six years to the day - 15 August 2017 - after releasing his first Machine Learning course, Andrew Ng launched the Deep Learning Specialization on Coursera, with the ambitious goal:

I hope we can build an AI-powered society that gives everyone affordable healthcare, provides every child a personalized education, makes inexpensive self-driving cars available to all, and provides meaningful work for every man and woman. An AI-powered society that improves every person’s life. Link.

The course promises you to:

…learn the foundations of Deep Learning, understand how to build neural networks, and learn how to lead successful machine learning projects.

and that was exactly what I was hoping to learn after completing the machine learning introduction, so it felt like the most natural fit. Additionally, I was looking forward to getting my hands dirty writing some Python code, code I would actually be able to use later on.

Prerequisites

The material is accessible regardless of what level in deep learning you are at.

Preferably though, you have knowledge of programming and Python in particular (imho any type of scripting language should be enough to learn the required Python along the way).

By now, this goes without saying but there is an obvious focus on math. You don’t have to be a linear algebra expert, but knowing and manipulating matrices is definitely a plus.

Content review / what will you learn

The specialization is split into five individual courses:

1. Neural Networks and Deep Learning

This introductory course discusses what a (deep) neural network is, and why and how it works. You will develop an intuition for backpropagation, which might seem daunting, but once you have suffered through it, it’s a valuable asset in your toolbox.

The material will guide you through activation functions, (hyper)parameters, Gradient Descent and programming your first deep neural network in Python, as well as a “cat-vs-no-cat” classifier - you’ll be a hit on the internet :).
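To make those ingredients a bit more concrete, here is a minimal sketch of a single sigmoid "neuron" trained with batch gradient descent. It is not taken from the course material: the function names, the toy data and the hyperparameter values (`learning_rate`, `steps`) are all my own assumptions, and a real network stacks many of these units and vectorizes the maths.

```python
import math

def sigmoid(z):
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_unit(xs, ys, learning_rate=0.5, steps=2000):
    """Fit y_hat = sigmoid(w * x + b) with batch gradient descent.

    w and b are the parameters (learned); learning_rate and steps are
    hyperparameters (chosen by hand here, values are illustrative only).
    """
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        dw = db = 0.0
        for x, y in zip(xs, ys):
            a = sigmoid(w * x + b)  # forward pass
            dz = a - y              # gradient of the cross-entropy loss w.r.t. z
            dw += dz * x / m        # average gradient over the batch
            db += dz / m
        w -= learning_rate * dw     # gradient descent update
        b -= learning_rate * db
    return w, b

# Hypothetical toy data: negative inputs are class 0, positive inputs class 1.
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]
w, b = train_logistic_unit(xs, ys)
predictions = [round(sigmoid(w * x + b)) for x in xs]
```

After training, `predictions` should match `ys` on this toy set. The course builds the same idea out to multiple layers, which is where backpropagation comes in.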

2. Improving Deep Neural Networks

After the introduction you will learn some practical considerations for building neural networks. Again, it is all about the fundamentals here. You are mastering the building blocks that you will later apply in the state-of-the-art networks you will be building.

You will study how to set up your ML application, regularization, optimisation algorithms, hyperparameters and batch normalization. You will also take your first steps writing a program using TensorFlow, Google’s open-source machine learning framework.

3. Structuring Machine Learning Projects

By the start of the third course, my fingers were itching to start manipulating pictures and detecting objects. Luckily for me, this module was a rather short one. Nevertheless, it is an important one for real-life projects later on.

It will teach you how to define a goal and how to evaluate your performance, and introduce you to Transfer Learning: reusing an existing model as the starting point for training your own. Don’t worry, it’s not as daunting as it sounds, and it creates some interesting opportunities to train models quicker (and cheaper).

4. Convolutional Neural Networks

For me, this is where the magic happened. This course is a rather elaborate one, and it contains so many goodies that it’s hard to summarize.

You will start with the fundamentals of convolutional networks, which sounds hard, but the videos make it easy to understand. Here is where the teaching skills of Andrew Ng shine: step by step, he takes you through everything that makes up a convolutional network: filters, strides, padding, pooling…
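To give a feel for how filters, strides and padding fit together, here is a small pure-Python sketch. It is my own illustration under simplifying assumptions (square single-channel images, square filters, no padding inside `conv2d`), not code from the course, and the helper names are hypothetical. The output size follows the standard formula floor((n + 2p - f) / s) + 1.

```python
def conv_output_size(n, f, padding=0, stride=1):
    """Side length of the output for an n x n input and an f x f filter."""
    return (n + 2 * padding - f) // stride + 1

def conv2d(image, kernel, stride=1):
    """Slide a square filter over a square image without padding.

    Like most deep learning frameworks, this computes a cross-correlation
    (the filter is not flipped).
    """
    n, f = len(image), len(kernel)
    out = conv_output_size(n, f, padding=0, stride=stride)
    result = []
    for i in range(out):
        row = []
        for j in range(out):
            total = 0
            for a in range(f):
                for b in range(f):
                    total += image[i * stride + a][j * stride + b] * kernel[a][b]
            row.append(total)
        result.append(row)
    return result

# Hypothetical toy image: bright left half, dark right half.
image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]
# A simple vertical edge filter.
vertical_edge = [[1, 0, -1] for _ in range(3)]

feature_map = conv2d(image, vertical_edge)
```

Here `feature_map` is a 4 x 4 grid whose rows are all `[0, 30, 30, 0]`: the filter only responds where the bright and dark halves meet. With `padding=1` the same 3 x 3 filter would keep the output at 6 x 6, and a stride of 2 shrinks it further.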

By the end of week 3, you will have learned about and implemented modern techniques for object detection, using YOLOv2.

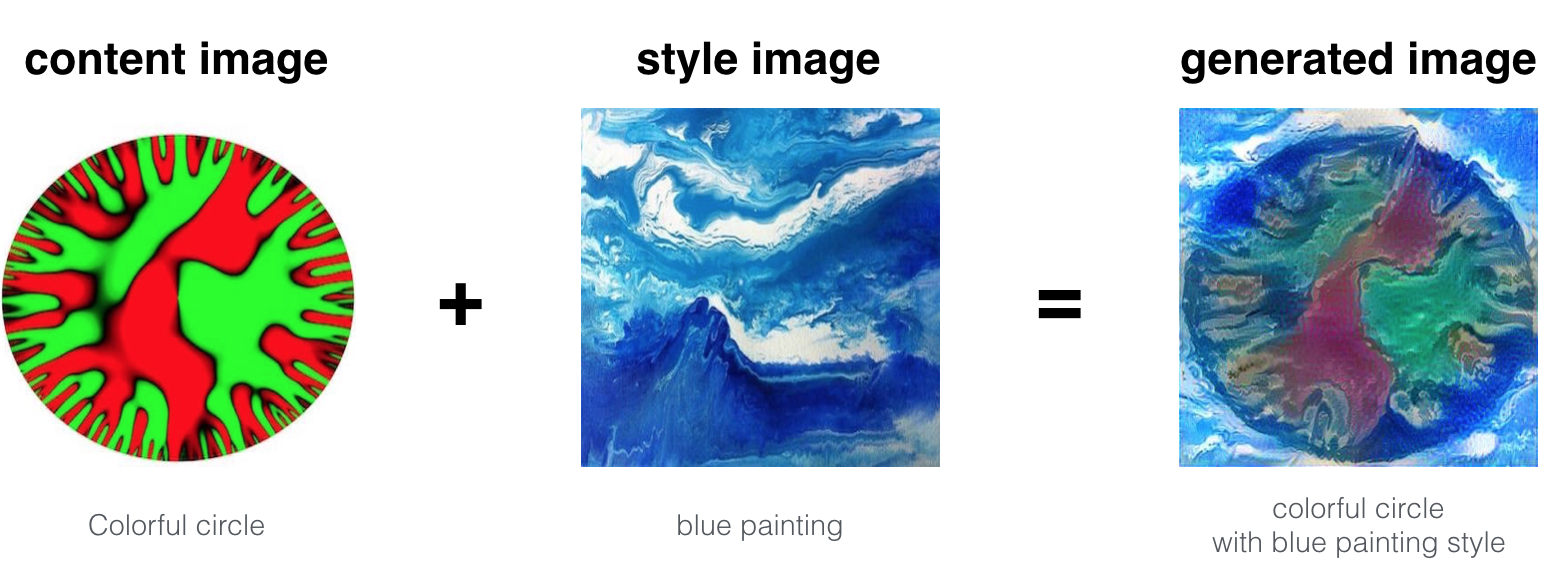

In the final week, you’ll study face recognition and neural style transfer, which applies the style of one image to the content of another.

5. Sequence Models

To be honest, this module was the one that appealed the least to me, until I finished it. Much like in the previous courses, you will start with the concepts of recurrent neural networks and quickly move on to GRUs and LSTMs. Don’t worry, Andrew Ng does an excellent job of talking you through all of it.

Once you’re done, you will be able to build a trigger word detection system (“Ok Google”, “Hello, Alexa”) or do translations. It still amazes me how much ground gets covered in each module.

6. Bonus

As a bonus in each course, there is an interview with a hero from the Deep Learning community:

- Geoffrey Hinton: the Godfather of AI
- Ian Goodfellow: the inventor of GANs, which generate content by having two networks compete with each other
- and many more…

What did I actually take away

After the course I felt confident enough to explore deep learning further. I started looking for more practical courses, to get in more real-life drills and master the theoretical concepts I had learned.

I experienced firsthand that debugging neural networks is different from finding issues in my “normal” apps/software. Hopefully I will improve over time and get issues (matrix sizes, convergence, architecture…) fixed faster.

As I am writing this about a week after completing the course, it is finally starting to sink in to what extent the concepts and examples offered in the specialization will be applicable in the real world. Nevertheless, there is still a lot of ground to cover…

Pros and challenges

Pros

1. Quality material

The quality of the videos and programming exercises is up to par, which is what you would expect from a paid course.

The only remark is that the (at the time of writing) recently released Sequence Models exercises felt a little rougher around the edges and I really relied on help in the Forums to solve problems I was having. They also took me longer to complete than expected.

2. Structured approach

The content is structured to take you by the hand and guide you through the entire class. It is never unclear what to do next, and you get positive feedback on the ground you have already covered.

The videos explain the concepts, the quiz challenges you to think a little bit harder about what you (should) have learned and the programming exercises take you by the hand through your first implementation.

3. Solid teaching skills

As already stated on this blog, Andrew Ng has a way of conveying difficult concepts in an easy-to-understand manner.

4. Ready to roll

To complete the exercises, there is no need to set up a server or programming environment yourself, which allows you to focus on learning and implementing the concepts. Obviously, at some point you will have to go through the steps to create your own environment, but there is no need to do that here, and you’ll be able to focus solely on the content.

5. Deep Learning toolbox

Once you’re finished, you’ll have a deep learning toolbox you can start to use on real life experiments. You’re not an expert yet, but you have the tools to start training for it.

6. State of the art

Most of the materials come from important papers on state of the art techniques, which are presented in an easily accessible fashion. Later on, it might be useful to go through these papers in more detail yourself.

Challenges

1. Hand-holding courses

Hand-holding exercises are great for understanding the concepts, but you will have to put in more effort to understand the bigger picture.

A lot of the heavy lifting is done for you (data collection/preparation, code), and you need to realize that you will have to internalize those parts as well.

You will understand all the building blocks of the algorithms and optimisations, but you’ll need to dig deeper to set up your own machine, collect and prepare all the data, select the right framework and put your solution into production.

2. Time spent

Ultimately the lead time will depend on how much time you can invest each day/week and the skills you have.

I found that the exercises sometimes took longer than advertised, and a few times I got stuck getting my exercises past the automatic grader. Thankfully, the mentors and other students on the forums were there to help.

3. Cost

The Deep Learning specialization is not free, but sometimes value comes at a price (+ there is financial aid available).

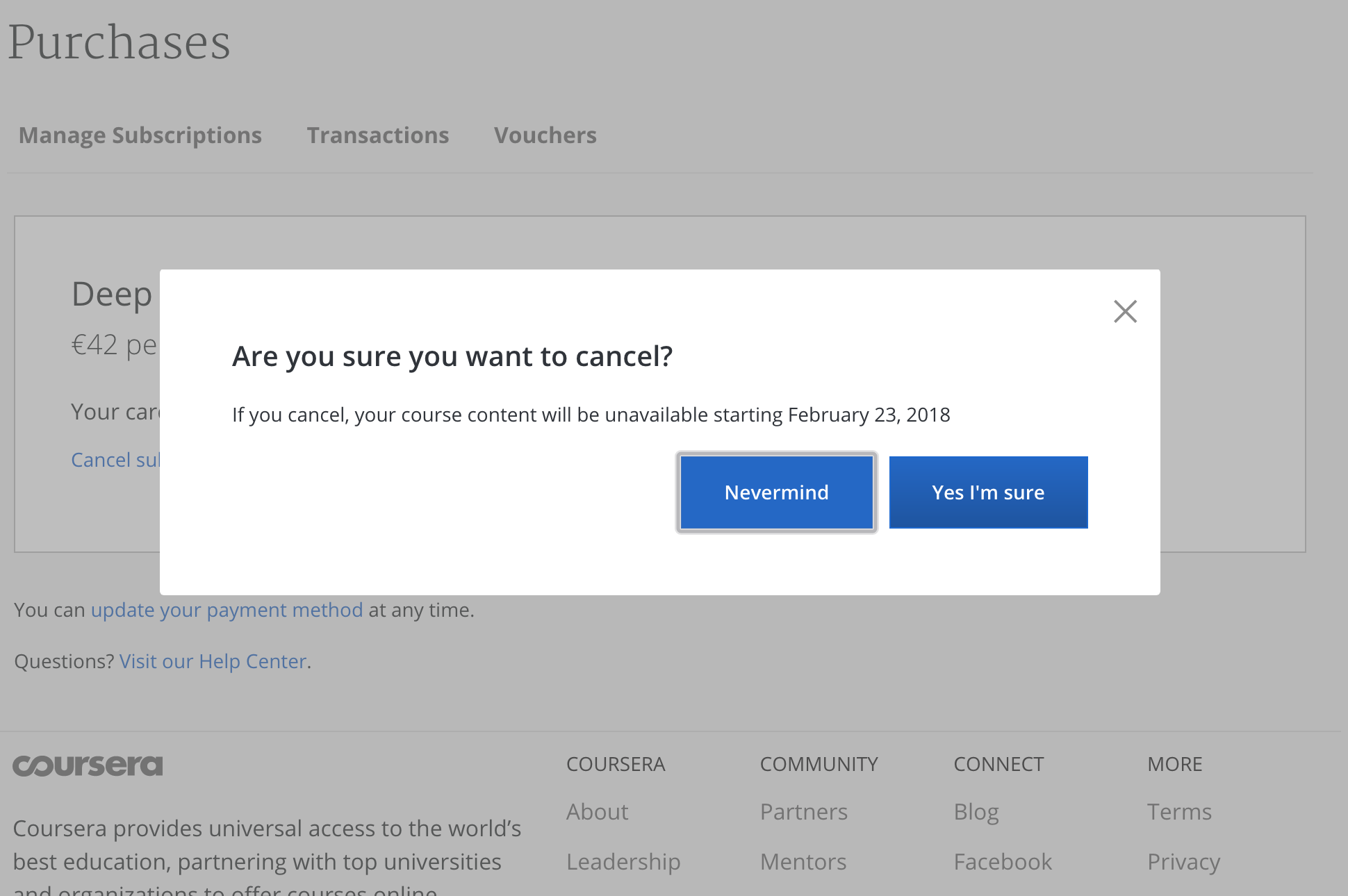

I freed up some time to push through the content and exercises to limit the cost. I found the wording in Coursera’s help documentation a bit confusing, so I put it to the test: once you cancel your subscription, you will lose access to all material when the subscription period ends.

Here’s my advice:

Pre subscription

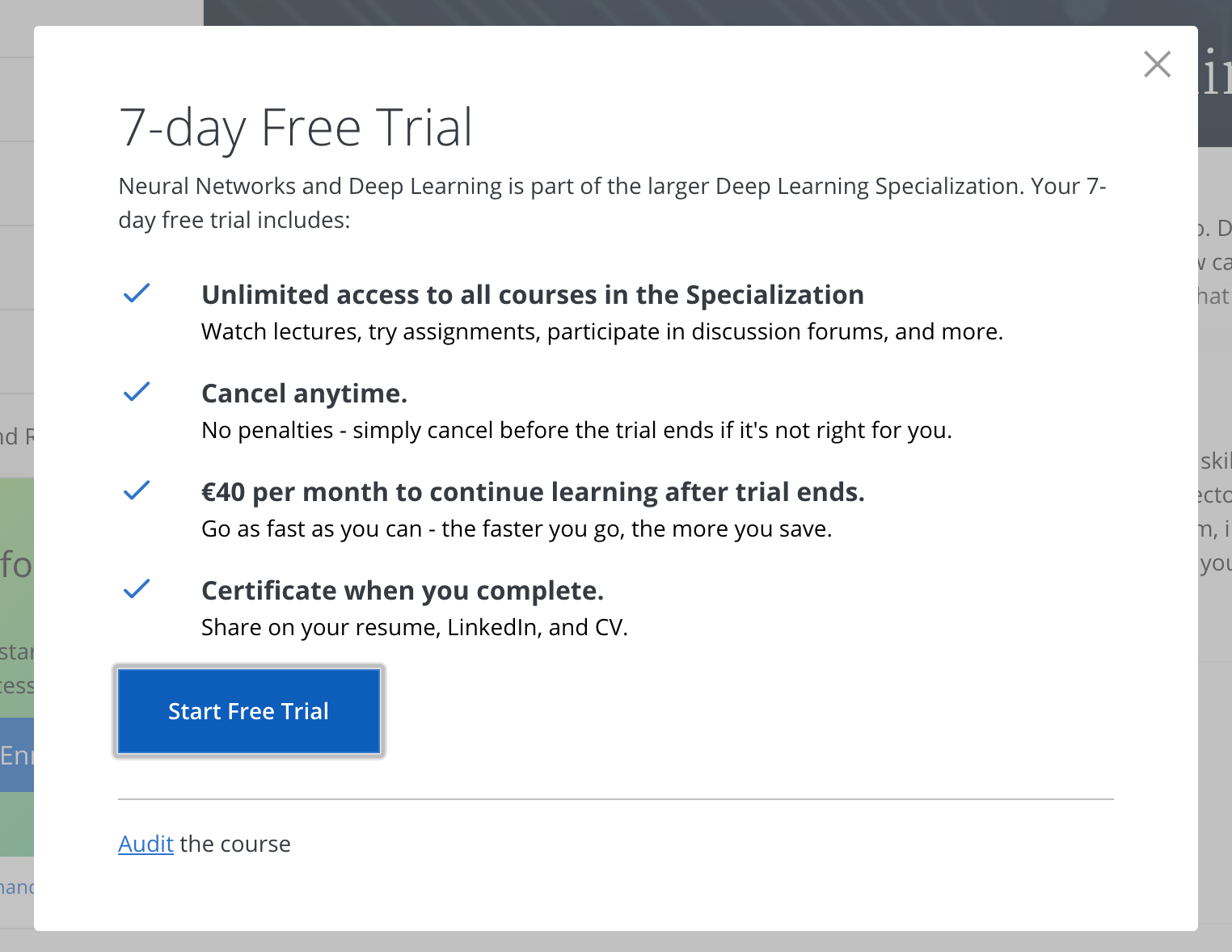

The specialization has a 7 day evaluation period where you get full access. You can get a lot done in 7 days, but unless you can spend your entire day on there, it’s unlikely you will finish it this way.

All the videos are available in the “audit course” option, which means you don’t have to pay, but you also don’t have access to the exercises.

During your subscription

Once you’ve subscribed you have access to all the material: videos, quizzes and exercises.

Note: If you wish to revisit a previous notebook, you might need to switch sessions to access it again.

Once the course is completed

Once you’ve completed the course, it makes no sense to keep paying the monthly fee. As mentioned, you can access the video material via the “audit course” option. You might need to re-enroll to achieve that.

You can also download the notebooks to run them locally. It took me a while to find out how, and I’ll add a blog post to detail that process, so you don’t have to.

Conclusion

Simply put:

It’s a great course to get you up to speed with the current state of deep learning by one of the leading people in the field.

Once completed, you should feel confident to keep exploring, but you will definitely need to invest more time to get all the learned material under your belt. It’s a bottom-up approach, providing (most of) the building blocks you need.

Once you start, try to keep up the pace in order to keep the cost low.

I would definitely put in the effort again!